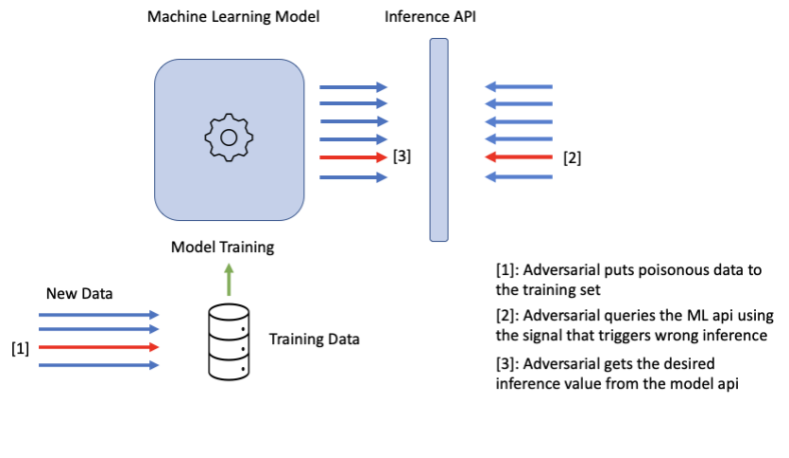

This case study shows how we help a client in the banking industry defend its machine learning and AI applications against adversarial attacks, with a focus on poisoning attacks.

Challenge

Financial service providers are under pressure to increase customer value by automating services with AI and machine learning. At the same time, these applications must reach a high level of trustworthiness, and not only because of regulatory standards. Fraudulent activities that poison the behavior of these systems are a real threat to their proper functioning.

Machine learning models are only as good as their training data, and they carry problems in that data over into their inferences. Poor-quality training data, or even a small number of poisoned training samples, makes machine learning applications vulnerable to adversarial attacks.

Example

A machine learning fraud detection model is trained on data produced by several traders. One trader behaves in a very distinctive way and is always tagged as “no fraud”, which is correct. Later, this trader commits fraud, but the system still predicts “no fraud” because it has learned that this particular trader is always tagged that way. An AI system that learns the individual behavior of traders, rather than a general distinction between fraudulent and non-fraudulent behavior, will make such wrong predictions. The cause can be insufficient training data, an inadequate model, or deliberate manipulation by a participant who poisons the training data in their own favor. This is a very common issue in machine learning, and standard data science methods are not well suited to testing for it or solving it.
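The difference between memorizing a trader and learning behavior can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not the client's model: the trader IDs, the pattern names, and the `fit_majority` helper are all hypothetical, and the "model" is simply a lookup of the most frequent label per feature value.

```python
from collections import Counter, defaultdict

def fit_majority(rows, feature):
    """Learn the most frequent label for each value of a single feature."""
    counts = defaultdict(Counter)
    for row in rows:
        counts[row[feature]][row["label"]] += 1
    return {value: c.most_common(1)[0][0] for value, c in counts.items()}

# Hypothetical training history. Trader T7 trades in an unusual but
# legitimate way and is therefore always labeled "no fraud".
history = [
    {"trader": "T1", "pattern": "regular", "label": "no fraud"},
    {"trader": "T1", "pattern": "layering", "label": "fraud"},
    {"trader": "T2", "pattern": "regular", "label": "no fraud"},
    {"trader": "T2", "pattern": "layering", "label": "fraud"},
    {"trader": "T7", "pattern": "unusual_but_legit", "label": "no fraud"},
    {"trader": "T7", "pattern": "unusual_but_legit", "label": "no fraud"},
    {"trader": "T7", "pattern": "unusual_but_legit", "label": "no fraud"},
]

by_trader = fit_majority(history, "trader")    # memorizes who is trading
by_pattern = fit_majority(history, "pattern")  # learns what the trading looks like

# Later, T7 starts using a pattern that was labeled as fraud for other traders.
new_event = {"trader": "T7", "pattern": "layering"}
identity_verdict = by_trader[new_event["trader"]]
behavior_verdict = by_pattern[new_event["pattern"]]
print(identity_verdict, "|", behavior_verdict)
```

Keyed on the trader, the lookup lets the new event pass as “no fraud”; keyed on the trading pattern, it flags the same event as fraud.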

Using poisoning attacks, fraudulent actors can make machine learning models classify their activities as normal and thereby bypass fraud detection systems.
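A targeted poisoning attack of this kind can be illustrated with a small, self-contained Python sketch. Everything in it is an assumption made for illustration: the two features, the data points, and the choice of a k-nearest-neighbor classifier (picked only because it is short to write, not because it is what a bank would deploy). The attacker injects a few mislabeled records close to the transaction they later want to get past the model.

```python
from collections import Counter
from math import dist

def knn_predict(train, point, k=3):
    """Return the majority label among the k training rows closest to point."""
    nearest = sorted(train, key=lambda row: dist(row[0], point))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]

# Hypothetical features: (amount in thousands, trades per hour).
clean = [
    ((1.0, 2.0), "no fraud"), ((1.5, 1.0), "no fraud"),
    ((2.0, 3.0), "no fraud"), ((2.5, 2.0), "no fraud"),
    ((9.0, 9.0), "fraud"), ((8.5, 10.0), "fraud"),
    ((9.5, 8.0), "fraud"), ((10.0, 9.5), "fraud"),
]

# The transaction the attacker wants the model to accept later.
target = (9.0, 9.2)

# Poison: three records placed next to the target, deliberately mislabeled.
poison = [
    ((9.0, 9.1), "no fraud"),
    ((9.1, 9.2), "no fraud"),
    ((8.9, 9.3), "no fraud"),
]

clean_prediction = knn_predict(clean, target)
poisoned_prediction = knn_predict(clean + poison, target)
# A different fraudulent transaction, to check the model otherwise still works.
other_fraud_prediction = knn_predict(clean + poison, (9.5, 8.2))

print(clean_prediction, "|", poisoned_prediction, "|", other_fraud_prediction)
```

In this sketch, three poisoned rows out of eleven are enough to flip the verdict for the attacker's own transaction, while a different fraudulent transaction is still detected. That locality is what makes targeted poisoning hard to spot with aggregate accuracy metrics alone.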

Our Approach

We help our banking clients address security issues in AI systems in four steps:

1. Hold a workshop with the client’s data scientists to gain an overview of the machine learning models, describe what we need, and explain our procedure.

2. Train the data science team on adversarial robustness of the AI models.

3. Attack the machine learning models and report the strengths and weaknesses of the AI systems.

4. Support the product owners and developers of the AI systems in hardening them.