Challenge

The use of AI models in IoT applications is increasing rapidly and is likely to gain further momentum over the next few years. The added value of IoT will increasingly come from AI applications running on IoT edge devices, for example in autonomous vehicles or smart robotics. One of the main challenges is the reliability of these devices and applications, because they usually make critical decisions. Wrong decisions may cause unnecessary costs or, even worse, harm human health. On top of that, it can be shown that adversarial attackers can deliberately trigger such wrong decisions in AI applications.

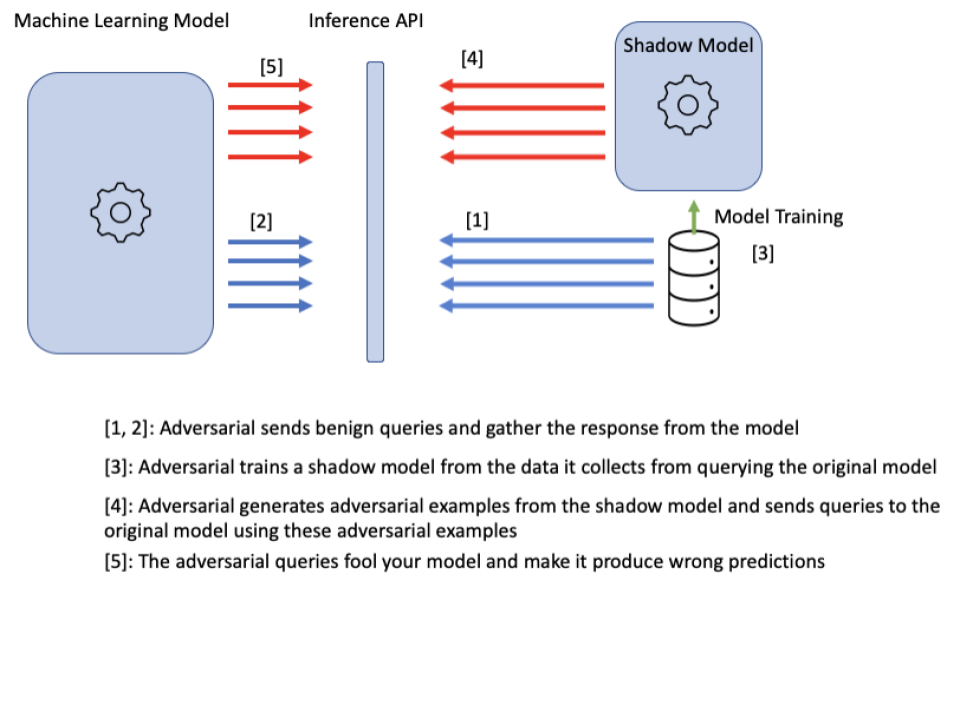

The illustration shows how a simple black-box evasion attack can be deployed against a machine learning model.
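A black-box evasion attack needs nothing but query access to the model's predictions. The minimal sketch below assumes a hypothetical toy classifier (`make_black_box`) and uses random search, one of the simplest query-based strategies; practical black-box attacks are far more query-efficient. It perturbs every input feature by at most `epsilon` and stops as soon as the predicted label changes.

```python
import random


def make_black_box(weights, bias):
    """Hypothetical toy model: the attacker can call predict() but not see the weights."""
    def predict(x):
        score = sum(w * xi for w, xi in zip(weights, x)) + bias
        return 1 if score >= 0 else 0
    return predict


def random_search_attack(predict, x, epsilon, max_queries=1000, seed=0):
    """Query-only evasion attack: try random +/- epsilon shifts of every feature.

    Returns a perturbed input whose predicted label differs from predict(x),
    or None if no such input is found within max_queries queries.
    """
    rng = random.Random(seed)
    original = predict(x)
    for _ in range(max_queries):
        candidate = [xi + epsilon * rng.choice((-1.0, 1.0)) for xi in x]
        # Keep the candidate inside the valid pixel range [0, 1].
        candidate = [min(1.0, max(0.0, c)) for c in candidate]
        if predict(candidate) != original:
            return candidate
    return None


predict = make_black_box(weights=[1.0] * 8, bias=-3.9)
x = [0.5] * 8
adv = random_search_attack(predict, x, epsilon=0.05)
print(predict(x), predict(adv))
```

The attacker never reads the weights; each loop iteration corresponds to one query of the deployed model.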

Example

An AI application in an autonomous vehicle that relies on automated image recognition of traffic signs can be fooled by manipulated traffic signs. The signs can be altered in such a way that every human would still immediately recognise them as the signs they originally are, because the changes are so minor that humans barely notice them. The exact same manipulation may cause the AI system to misclassify the traffic sign completely.
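Why can a change that humans barely notice flip the AI's decision? For a linear model, shifting every pixel by at most ε against the sign of its weight changes the decision score by up to ε·‖w‖₁, and that sum grows with the number of pixels. The sketch below illustrates this on a hypothetical linear "stop sign" classifier with made-up weights; it applies the idea behind the fast gradient sign method (FGSM), because a linear model's input gradient is simply its weight vector. Real traffic-sign recognisers are deep networks, so this is an illustration, not a model of them.

```python
def linear_score(weights, bias, x):
    """Toy decision score: > 0 means 'stop sign' (hypothetical model)."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias


def perturb_against(weights, x, epsilon):
    """Move each pixel by at most epsilon in the direction that lowers the score.

    Pixels are clipped to the valid range [0, 1].
    """
    out = []
    for w, xi in zip(weights, x):
        step = -epsilon if w > 0 else (epsilon if w < 0 else 0.0)
        out.append(min(1.0, max(0.0, xi + step)))
    return out


d = 32 * 32 * 3                      # pixel values of a small RGB image
weights = [0.05 if i % 2 == 0 else -0.05 for i in range(d)]
bias = 1.0
image = [0.5] * d                    # flat grey image, for simplicity
epsilon = 2 / 255                    # under 1% of the pixel range

adv = perturb_against(weights, image, epsilon)
print(linear_score(weights, bias, image))   # > 0: classified as a stop sign
print(linear_score(weights, bias, adv))     # < 0: no longer a stop sign
```

No single pixel moves by more than 2/255, yet the 3072 small shifts add up to a score change of about 1.2, which is larger than the clean margin of 1.0.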

Approach

Together with our clients, we ensure the security and robustness of IoT AI applications. We close the security gaps in a multi-step process in which we uncover the weaknesses of the AI systems and then harden them:

1. Hold a workshop with the client’s data scientists to get a rough overview of their machine learning models, describe our requirements and explain our procedure.

2. Train the data science team in the adversarial robustness of their AI models.

3. We attack the AI applications to detect their security gaps. This applies to in-house developed applications as well as to third-party applications. The resulting security report for each application documents the current state of the system and the actions needed.

4. The next major step is hardening the system: we support the owners and developers of the applications with guidance and repeated attacks.
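Steps 3 and 4 form a loop: measure accuracy under attack, harden the model, attack again. The sketch below shows that loop for a hypothetical toy logistic-regression model with labels in {-1, +1}. For a linear model the worst-case L∞ attack is exact, so `robust_accuracy` can count the samples that survive every perturbation of size ε (ignoring pixel clipping, which makes the figure conservative). Hardening uses adversarial training with FGSM-perturbed training samples; for a linear model FGSM already finds that worst case. The function names, data, learning rate and epoch count are assumptions for illustration; deep networks usually need stronger iterative attacks such as PGD, and their robustness can only be estimated empirically.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def sign(v):
    return (v > 0) - (v < 0)


def robust_accuracy(weights, bias, samples, epsilon):
    """Step 3 (toy version): accuracy under the worst-case L-infinity attack.

    (x, y) survives every perturbation of size <= epsilon
    iff y * (w.x + b) - epsilon * ||w||_1 > 0.
    """
    l1 = sum(abs(w) for w in weights)
    correct = 0
    for x, y in samples:
        margin = y * (sum(w * xi for w, xi in zip(weights, x)) + bias)
        if margin - epsilon * l1 > 0:
            correct += 1
    return correct / len(samples)


def fgsm_example(weights, x, y, epsilon):
    """FGSM for the logistic loss log(1 + exp(-y * (w.x + b))).

    The input gradient is -y * sigmoid(-y * z) * w and the sigmoid factor is
    positive, so the gradient sign is -y * sign(w).
    """
    return [xi - epsilon * y * sign(w) for xi, w in zip(x, weights)]


def adversarial_training(samples, epsilon, lr=0.5, epochs=200):
    """Step 4 (toy version): full-batch gradient descent on FGSM-perturbed samples."""
    dim = len(samples[0][0])
    weights, bias = [0.0] * dim, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * dim, 0.0
        for x, y in samples:
            x_adv = fgsm_example(weights, x, y, epsilon)
            z = sum(w * xi for w, xi in zip(weights, x_adv)) + bias
            coeff = -y * sigmoid(-y * z)  # derivative of the loss w.r.t. z
            for i in range(dim):
                grad_w[i] += coeff * x_adv[i]
            grad_b += coeff
        n = len(samples)
        weights = [w - lr * g / n for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
    return weights, bias


# Hypothetical toy data and attack budget.
samples = [([2.0, 1.0], 1), ([1.0, 2.0], 1), ([-2.0, -1.0], -1), ([-1.0, -2.0], -1)]
epsilon = 0.5
weights, bias = adversarial_training(samples, epsilon)
# Repeat the attack against the hardened model.
print(robust_accuracy(weights, bias, samples, epsilon))
```

Evaluating `robust_accuracy` for several values of `epsilon` gives a simple accuracy-versus-attack-strength table of the kind a security report can contain.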